Animal behaviors are vastly diverse, from the dexterous leg movements of a fly foraging for food to the acrobatic swing of a macaque between trees. To understand the neural basis of these behaviors, neuroscientists have long sought to describe these motions with increasing fidelity. To this end, modern neuroscience increasingly relies on 3-dimensional (3D) pose tracking, i.e., following the coordinates of a set of relevant body parts over time. 3D poses provide a complete description of a movement because any joint motion can be unambiguously inferred and related to neural signals.

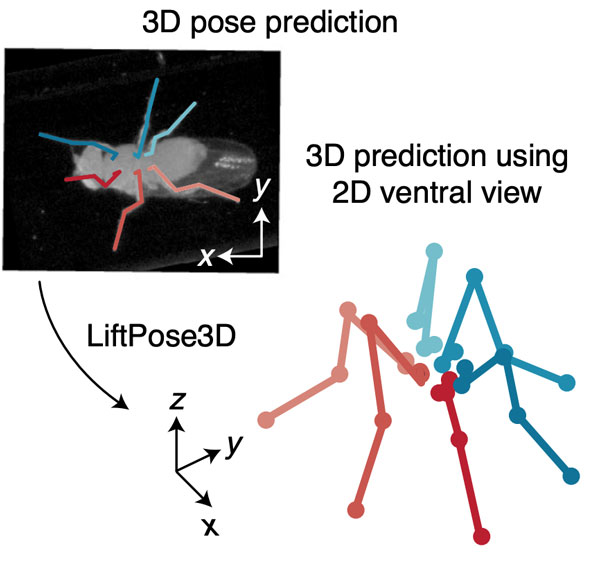

Figure: LiftPose3D uses deep learning to infer 3D poses from 2D poses derived from images

Despite the advantages of 3D poses, obtaining them has required specialized hardware setups with multiple synchronized cameras to keep the moving animal in focus. This is a substantial challenge, particularly when studying animal behavior in naturalistic contexts. Many previous studies have therefore used 2D poses or coarse metrics such as gait diagrams to study kinematic sequences. However, 3D poses are becoming increasingly relevant because they connect the converging advances in biomechanics and robotics. Moreover, coarse kinematic metrics do not allow the unambiguous inference of 3D poses and often lead to uncertainties in behavioral analyses. Our approach, LiftPose3D, whose development was made possible by the generous timeframe of our HFSP Cross-Disciplinary Fellowship, surmounts the challenges associated with 3D pose estimation by reconstructing 3D poses directly from 2D poses.

At first, this may sound like a contradiction: how can 3D poses be reconstructed when many different 3D poses can correspond to the same 2D pose? This ill-posed problem is resolved by realizing that the pose repertoire of animals covers only a fraction of all possible configurations. Indeed, owing to the limited range of joint motion and the fact that animals seek efficient ways to coordinate their joints, the position of any limb is in a strict geometric relationship with the other limbs. LiftPose3D uses a deep neural network to learn these spatial geometric relationships between key points from a library of commonly adopted 3D poses.
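To make the role of geometric constraints concrete, the following toy sketch shows how a single known constraint narrows the possible depths. It is an illustration only, not LiftPose3D's method: it assumes an orthographic camera and a rigid limb segment of known length, and the function name `depth_offsets` is hypothetical.

```python
import math

def depth_offsets(p_a, p_b, segment_length):
    """Return the possible depth differences (z_b - z_a) for a rigid segment.

    p_a, p_b: (x, y) image coordinates of two joints (orthographic projection assumed).
    segment_length: known 3D length of the segment joining them, in the same units.
    """
    d2d = math.dist(p_a, p_b)  # length of the segment as seen in the image
    if d2d > segment_length:
        return []  # the 2D pose is inconsistent with a segment of this length
    dz = math.sqrt(segment_length**2 - d2d**2)
    return [dz] if dz == 0 else [-dz, dz]

# A segment of length 5 whose projection has length 3 must span a depth of 4.
print(depth_offsets((0.0, 0.0), (3.0, 0.0), 5.0))  # [-4.0, 4.0]
```

Without the length constraint, any depth would be compatible with the 2D pose; with it, only a front/back ambiguity remains. A learned lifter can be thought of as exploiting many such relationships across the whole body at once.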

LiftPose3D’s unique advantages lie in the data augmentation techniques it uses, which overcome the training data challenges that laboratory studies often face across a wide range of animals, camera angles, experimental systems, and behaviors. Our study demonstrated in fruit flies and macaques (link to videos) that LiftPose3D's deep network can lift 3D poses from a single camera without knowing its position. Consequently, no camera calibration is required, making pre-trained LiftPose3D networks useful across laboratories and datasets. We also showed in fruit flies, mice, and rats that our network can overcome occlusions and outliers in training data by learning geometric relationships between animal poses. Finally, we found that when 3D pose information is not available, pre-trained networks combined with domain adaptation can generalize across domains to lift previously unseen 3D poses.
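One common way to make a lifting network robust to an unknown camera position is to project each 3D training pose from many randomly chosen virtual viewpoints. The sketch below illustrates that general idea under simplifying assumptions (rotation about a single vertical axis, orthographic projection, 3D targets expressed in the virtual camera's frame). All function names are hypothetical, and this is not a description of LiftPose3D's actual augmentation code.

```python
import math
import random

def rotate_about_vertical(pose_3d, angle):
    """Rotate each (x, y, z) key point about the vertical (y) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in pose_3d]

def project_orthographic(pose_3d):
    """Drop the depth (z) coordinate to obtain 2D key points."""
    return [(x, y) for x, y, _ in pose_3d]

def augment(pose_3d, n_views, rng):
    """Generate (2D input, 3D target) pairs as seen from random virtual camera angles."""
    pairs = []
    for _ in range(n_views):
        angle = rng.uniform(-math.pi, math.pi)
        rotated = rotate_about_vertical(pose_3d, angle)
        pairs.append((project_orthographic(rotated), rotated))
    return pairs

pose = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.2), (1.5, -0.3, 0.8)]  # toy 3-point pose
pairs = augment(pose, n_views=4, rng=random.Random(0))
```

Each 3D pose thus yields several training pairs, so a network trained on them sees the same posture from many angles rather than from one calibrated camera.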